Best Crypto to Watch in 2026, Experts Compare

Dubai, UAE, February 9, 2026

The digital asset market in 2026 is moving away from pure hype and toward actual utility. Many investors are looking for the best way to allocate a $1,000 crypto budget before the next major altcoin rotation. While the famous names of the past still hold significant market share, their growth potential is being challenged by high valuations and structural resistance. As the second quarter of 2026 approaches, the search for the next breakout star is leading away from the top ten and toward emerging protocols that offer more than just a famous brand.

Shiba Inu (SHIB)

Shiba Inu (SHIB) remains a dominant force in the meme coin sector, but its price action in early 2026 has been underwhelming. Currently trading near $0.0000058, the token holds a market capitalization of roughly $3.5 billion. Despite the launch of various ecosystem updates, the sheer size of its circulating supply continues to be a major hurdle for significant price moves.

Technically, SHIB is facing a heavy resistance zone between $0.0000069 and $0.0000072. Every attempt to break this level has been met with intense selling pressure. Some analysts have issued a bearish outlook for the year, suggesting that if SHIB fails to hold its current support at $0.0000051, it could slide further toward $0.0000032. That would be a decline of nearly 45% from the current price, making SHIB a risky choice for those seeking high growth in 2026.
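The size of that decline depends on which price it is measured from. A quick Python check using only the prices quoted in this section (the helper name `pct_change` is illustrative):

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end; negative values are declines."""
    return (end - start) / start * 100


current_price = 0.0000058    # quoted trading price
support_level = 0.0000051    # quoted support level
bearish_target = 0.0000032   # quoted downside target

print(f"from current price: {pct_change(current_price, bearish_target):.1f}%")
print(f"from support level: {pct_change(support_level, bearish_target):.1f}%")
```

Measured from the current price, the slide to $0.0000032 is about 44.8%, which matches "nearly 45%". Measured from the $0.0000051 support level, the same move is closer to 37%.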

Ripple (XRP)

Ripple (XRP) is currently navigating a period of high volatility after failing to maintain its early January highs. The token is trading around $1.40 with a market capitalization of approximately $85 billion. While it remains a key player in institutional finance, its massive valuation means it requires billions in new capital just to move the price by a small percentage.

The primary resistance zones for XRP are clustered around $1.44 and $1.60. The market is also dealing with monthly escrow unlocks that add hundreds of millions of tokens to the supply, creating a constant “sell wall.”

Some pessimistic forecasts project that XRP could drop as low as $0.50, or even $0.27, by late 2026 if broader market liquidity continues to tighten. For a $1,000 investor, the odds of a meaningful return on an asset this large look increasingly slim.

Mutuum Finance (MUTM)

While legacy assets continue to face strong resistance, Mutuum Finance (MUTM) is carving out a new direction focused on practical lending use cases. The protocol is a non-custodial lending system built with Layer 2 efficiency in mind, aiming to keep transactions fast and low-cost. Its structure supports both automated pool-based activity and direct user agreements through a dual-market design that includes Peer-to-Contract (P2C) pools and Peer-to-Peer (P2P) lending.

A key milestone has already been reached with the V1 protocol now live on the Sepolia testnet. This live test environment allows users to interact with real lending features using major assets such as ETH, USDT, WBTC, and LINK. Participants can supply liquidity, mint yield-bearing mtTokens, monitor loan health factors, and observe how automated liquidations work in real time.

Alongside this progress, the presale is currently in Phase 7, with MUTM priced at $0.04. With more than $20.4 million raised and over 19,000 holders, momentum is building as the project moves closer to its confirmed $0.06 launch price.

The $1,000 Growth Potential

The reason experts are highlighting MUTM over legacy assets is its aggressive growth model. The protocol’s whitepaper describes a buy-and-distribute mechanism that uses a portion of all platform fees to purchase tokens from the open market. These tokens are then distributed to users who stake in the system, a design intended to link the platform’s actual usage to demand for the token.

A $1,000 investment in Mutuum Finance at the current $0.04 price secures 25,000 tokens. At the $0.06 launch price alone, those tokens would be worth $1,500. If the project reaches the analyst target of $0.40 by the end of 2026, that $1,000 could grow to $10,000.

The protocol recently cleared a security audit by Halborn and maintains a high transparency score from CertiK. Plans for a native stablecoin and Layer 2 integration aim to further reduce costs and increase total value locked.

This projected increase is a realistic bullish scenario based on the protocol’s small initial market cap and its Layer 2 expansion plans. As Phase 7 sells out quickly, the window to secure MUTM at roughly a 33% discount to the launch price (the $0.06 launch price is 50% above the current $0.04) is closing fast.
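The token arithmetic in this section can be reproduced in a few lines of Python. This sketch uses only the prices quoted above; the $0.40 figure is an analyst target, not a guaranteed outcome, and fees, slippage, and any vesting terms are ignored. It also separates two figures that are easy to conflate: the discount of the presale price relative to the launch price, and the premium of the launch price over the presale price.

```python
budget = 1_000.00      # dollars invested
presale_price = 0.04   # quoted Phase 7 price
launch_price = 0.06    # quoted launch price
target_price = 0.40    # quoted analyst target (an assumption, not a guarantee)

tokens = budget / presale_price
value_at_launch = tokens * launch_price
value_at_target = tokens * target_price

# How far the presale price sits below the launch price.
discount_pct = (launch_price - presale_price) / launch_price * 100
# How far the launch price sits above the presale price.
premium_pct = (launch_price - presale_price) / presale_price * 100

print(f"tokens: {tokens:,.0f}")
print(f"value at launch price: ${value_at_launch:,.2f}")
print(f"value at target price: ${value_at_target:,.2f}")
print(f"discount to launch: {discount_pct:.1f}%, launch premium: {premium_pct:.1f}%")
```

The same $0.02 gap is about a 33% discount when measured against the launch price and a 50% premium when measured against the presale price.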

For more information about Mutuum Finance (MUTM) visit the links below:

Website: https://www.mutuum.com

Linktree: https://linktr.ee/mutuumfinance

Disclaimer:

This article is for informational purposes only and does not constitute financial advice. Cryptocurrency investments carry risk, including total loss of capital. Readers should conduct independent research and consult licensed advisors before making any financial decisions.


Crypto Press Release Distribution by BTCPressWire.com

Ripple (XRP) Whales Add This New Crypto Ahead of Q2 2026, Here’s Why

Dubai, UAE, February 9, 2026

Large holders are starting to reposition as Q2 2026 approaches, and on-chain data suggests that Ripple (XRP) whales are no longer focused on a single asset. With XRP facing slower momentum near key resistance levels, attention is shifting toward newer crypto projects that offer clear utility and early-stage growth potential. Analysts note that this rotation is driven by fundamentals, not hype, as whales look for scalable platforms, active development, and strong adoption signals. One cheap crypto is now standing out as a preferred accumulation target ahead of the next crypto market phase.

Ripple (XRP)

Ripple (XRP) remains a central figure in the global payment space, but it is facing a difficult technical landscape in early 2026. The token is currently trading near $1.40 with a market capitalization of approximately $86 billion. While it continues to facilitate cross-border settlements, the asset is struggling to reclaim its previous yearly highs. Analysts note that XRP is stuck in a heavy consolidation phase, battling several layers of resistance that have proven difficult to break so far this quarter.

The primary resistance zones for Ripple (XRP) are located at $1.45 and $1.75. These levels are crowded with sell orders from long-term holders looking to exit their positions. Furthermore, a major pattern on the daily chart has increased the bearish pressure. If the token fails to hold the $1.15 support level, experts warn of a potential slide back toward the $0.95 mark. This technical weakness is one of the main reasons why large holders are starting to diversify into newer assets with more immediate growth potential.

Mutuum Finance (MUTM)

Mutuum Finance (MUTM) is a decentralized lending and borrowing protocol built to meet the high-speed demands of the 2026 market. By using Layer 2 infrastructure, it aims to keep transactions fast and costs low for everyday users. The protocol follows a dual-market design. Users can access Peer-to-Contract (P2C) pools for automated yield or use Peer-to-Peer (P2P) markets to set custom loan terms directly.

In the P2C pools, returns are variable and depend on borrowing demand. For example, if a USDT pool offers a 6% to 10% annual yield, a user supplying $5,000 could earn between $300 and $500 over a year, assuming similar conditions. This structure gives lenders passive income options while offering borrowers quick access to liquidity under transparent rules.
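A quick Python check of the yield example above. It treats the quoted figures as effective annual yields and assumes the rate stays within the illustrative 6% to 10% range for the whole year; real P2C returns vary with borrowing demand, and fees are ignored.

```python
principal = 5_000.00             # dollars supplied to a hypothetical USDT pool
yield_low, yield_high = 0.06, 0.10  # illustrative annual yield range

# One year of earnings at each end of the range.
earn_low = principal * yield_low
earn_high = principal * yield_high

print(f"${earn_low:,.0f} to ${earn_high:,.0f} per year")
```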

The growth of Mutuum Finance (MUTM) has been remarkable since its launch in early 2025. The project has already raised over $20.5 million and secured a community of more than 19,000 holders. Currently, the presale is in Phase 7 with the token priced at $0.04. This follows a steady climb from its initial starting price of $0.01. With an official launch price set at $0.06, those participating in the current phase are looking at a strategic entry point before the token hits major global exchanges.

Contrasting Price Predictions for Q2 2026

When comparing Ripple (XRP) and Mutuum Finance (MUTM), the growth trajectories look very different. For Ripple (XRP), many analysts have a cautious outlook for the middle of 2026. Because of its massive market cap and the heavy resistance at $1.50, the realistic upside is limited.

Some experts predict that XRP will stay range-bound between $1.00 and $1.30 for the next several months. The sheer amount of new capital required to double its price makes it a slow mover in a fast market.

In contrast, the price prediction for Mutuum Finance (MUTM) is much more bullish. Analysts point to the project’s small initial valuation and its high utility as primary drivers. Once the protocol moves from the testnet to the mainnet, the demand for the native token is expected to surge.

Some market experts suggest that MUTM could reach a target of $0.40 to $0.50 by the end of 2026. That would represent an increase of 900% to 1,150% from its current $0.04 presale price. This growth is backed by the protocol’s buy-and-distribute model, which uses platform fees to support the token value.

Security Integrity and Whale Accumulation

A major factor in why Ripple (XRP) whales are moving into Mutuum Finance (MUTM) is the project’s commitment to safety. The protocol has successfully completed a full security audit by Halborn, one of the most respected security firms in the world.

This audit gives large investors the peace of mind they need to commit significant capital. The MUTM token also holds a high transparency score on several tracking platforms, which the project cites as evidence of its focus on long-term reliability.

The project also features a 24-hour leaderboard on its dashboard that shows the largest contributions in real time. Recently, this board has shown several entries exceeding $100,000, which are typical signs of whale activity. These large players are taking advantage of the current presale price, which sits roughly 33% below the $0.06 launch price.

As the market moves toward Q2 2026, the rotation of capital is becoming clear. While Ripple (XRP) remains a staple of the industry, its growth has slowed down. This has created a gap that Mutuum Finance (MUTM) is quickly filling. With a working testnet, audited code, and a rapidly selling presale, the project is positioning itself as a top altcoin choice for those looking for the next breakout crypto.

For more information about Mutuum Finance (MUTM) visit the links below:

Website: https://www.mutuum.com

Linktree: https://linktr.ee/mutuumfinance

Disclaimer:

This article is for informational purposes only and does not constitute financial advice. Cryptocurrency investments carry risk, including total loss of capital. Readers should conduct independent research and consult licensed advisors before making any financial decisions.


Crypto Press Release Distribution by BTCPressWire.com

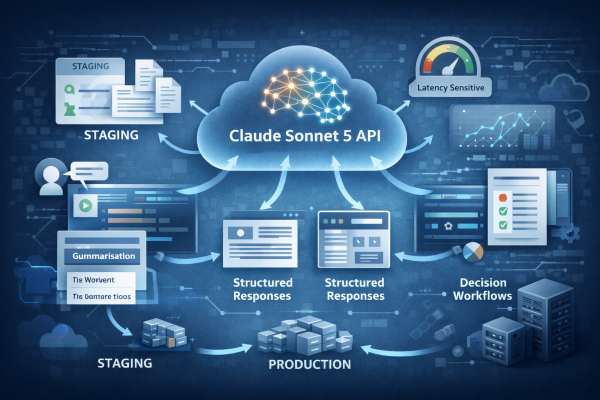

Fine-tuning vs RAG vs Prompt Engineering: the fastest way to choose the right approach

If you’re trying to ship something real, not just a demo, this is the decision that keeps teams stuck: do we fix answers with better prompts, ground the model with RAG, or train it with fine-tuning? Choose the wrong lever and you feel it immediately. Responses sound confident but miss policy details, outputs change every run, and stakeholders start asking questions you can’t dodge: “Where did this come from?” “How do we keep it updated?” “Can we trust it in production?”

This guide is built the way subject-matter experts (SMEs) make the call in real projects: decision first, trade-offs second. In the next few minutes, you’ll know exactly when to use prompt engineering, when RAG is non-negotiable, when fine-tuning actually pays off, and when the best answer is a hybrid approach.

Choose like this (60-second decision guide)

The fastest way to choose

Choose RAG if your pain is: “It must be correct and provable”

Pick Retrieval-Augmented Generation (RAG) when:

- Your answers must come from your internal docs (policies, SOPs, product manuals, contracts, knowledge base)

- Your content changes weekly/monthly and you need updates without retraining

- People will ask: “Where did this answer come from?”

- The biggest risk is hallucination + missing critical details

If your end users need source-backed answers or current information, RAG is your default.

Real-world pain point: “Support agents can’t use answers unless they can open the policy link and confirm it.”

Choose Fine-tuning if your pain is: “The output must be consistent every time”

Pick Fine-tuning when:

- You need a repeatable style, format, or behavior (structured JSON, classification labels, specific tone, regulated wording)

- You’ve already tried prompting and still get inconsistent outputs

- Your use case is more “do the task this way” than “look up this knowledge”

- You have good example data (high-quality inputs → desired outputs)

If the pain is “the model doesn’t follow our expected pattern,” fine-tuning is how you train behavior.

Real-world pain point: “The model writes decent summaries, but every team gets a different structure, and QA can’t validate it.”

Choose Prompt Engineering if your pain is: “We need results fast with minimal engineering”

Pick Prompt Engineering when:

- You’re in early exploration or PoC and need speed

- Your content is small, stable, or not dependent on private docs

- You mainly need better instructions, constraints, examples, and tone

- You want the quickest route to “good enough” before investing in architecture

If the goal is fast improvement with low engineering effort, start with prompting.

Real-world pain point: “We don’t even know the right workflow yet; we need something usable this week.”

The 60-second decision matrix (use this like a checklist)

Ask these five questions. Your answers point to the right approach immediately:

Does the answer need to be based on internal documents or changing info?

- Yes → RAG

- No → go to next question

Do users need source links / citations to trust the output?

- Yes → RAG

- No → go to next question

Do you need outputs in a strict format every time (JSON, labels, standard template)?

- Yes → Fine-tuning (often with lightweight prompting too)

- No → go to next question

Do you have high-quality examples of “input → perfect output”?

- Yes → Fine-tuning is worth considering

- No → Prompting or RAG (depending on whether knowledge grounding is needed)

Is speed more important than perfection right now?

- Yes → start with Prompt Engineering

- No → choose based on correctness vs consistency:

- correctness + traceability → RAG

- consistent behavior → Fine-tuning
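The five questions above form a simple decision tree. Here is a minimal Python sketch of that tree, assuming the questions are asked in order and the first decisive answer wins. The `UseCase` field names are illustrative, and the comments label the questions Q1 to Q5. Because the “No” branch of the fourth question overlaps with the fifth, this sketch assumes a Q4 “No” falls through to Q5, since grounding has already been ruled out by Q1 and Q2.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Answers to the five checklist questions (field names are illustrative)."""
    needs_internal_or_changing_info: bool   # Q1
    needs_citations: bool                   # Q2
    needs_strict_format: bool               # Q3
    has_quality_examples: bool              # Q4
    speed_over_perfection: bool             # Q5
    # Tie-breaker when Q5 is "No": True means correctness/traceability
    # matters more than consistent behavior.
    favors_correctness: bool = True


def choose_approach(uc: UseCase) -> str:
    """Walk the questions in order and return the first decisive answer."""
    if uc.needs_internal_or_changing_info:  # Q1
        return "RAG"
    if uc.needs_citations:  # Q2
        return "RAG"
    if uc.needs_strict_format:  # Q3 (often paired with lightweight prompting)
        return "Fine-tuning"
    if uc.has_quality_examples:  # Q4
        return "Fine-tuning (worth considering)"
    # Assumption: Q4 = "No" falls through to Q5.
    if uc.speed_over_perfection:  # Q5
        return "Prompt engineering"
    return "RAG" if uc.favors_correctness else "Fine-tuning"


if __name__ == "__main__":
    poc = UseCase(False, False, False, False, speed_over_perfection=True)
    print(choose_approach(poc))  # Prompt engineering
```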

Quick “pick this, not that”

- If your pain is wrong answers, don’t jump to fine-tuning first; start with RAG so the model is grounded in real sources.

- If your pain is inconsistent formatting, RAG alone won’t fix it; consider fine-tuning (or at least structured prompting) to stabilize outputs.

- If your pain is you need something working now, start with prompt engineering, but don’t pretend that prompts alone will solve deep doc accuracy or governance issues at scale.

Prompt Engineering vs RAG vs Fine-Tuning: the trade-offs that matter in production

Which approach removes the risk that’s hurting us right now? Here are the production trade-offs that actually decide it.

1) Freshness and update speed

- Prompt engineering: Great when the knowledge is small or stable. But if your policies/pricing/SOPs change, you’ll keep chasing prompts.

- RAG: Built for change. Update the knowledge source → answers can reflect the latest content.

- Fine-tuning: Not meant for frequent knowledge updates. It’s for teaching patterns/behavior, not “today’s version of the handbook.”

2) Trust and traceability

- Prompt engineering: Can sound convincing without being verifiable.

- RAG: Can return answers tied to retrieved context (and you can show “what it used”).

- Fine-tuning: Typically improves how it responds, but it doesn’t naturally give “here’s the source document” behavior unless you still add retrieval or explicit citation logic.

3) Output consistency (format, tone, structure)

- Prompt engineering: Can get close, but consistency often breaks under edge cases.

- RAG: Improves correctness with grounding, but it doesn’t guarantee strict formatting by itself.

- Fine-tuning: Best lever for consistent behavior, structured outputs, classification labels, tone guidelines, domain-specific response patterns.

4) Cost and latency (what you’ll feel at scale)

- Prompt engineering: Lowest setup cost, fastest to iterate.

- RAG: Adds retrieval steps (vector search, re-ranking, larger context). That can increase latency and token usage.

- Fine-tuning: Upfront training cost + ongoing lifecycle cost (versioning, evals, monitoring), but can reduce runtime prompt length in some cases.

5) Operational burden (who maintains it)

- Prompt engineering: Mostly prompt iteration and guardrails.

- RAG: You now own ingestion, chunking, embeddings, access control, retrieval evaluation, refresh cadence.

- Fine-tuning: You now own training data quality, evaluation sets, regression testing, model updates, rollback plans.

When to use each method (real scenarios)

Use Prompt Engineering when…

You need a quick lift without adding new infrastructure.

Choose prompting if:

- You’re early in exploration or a PoC and need value fast

- The task is mostly about instructions, tone, constraints, and examples

- Your answers don’t depend heavily on private or frequently changing internal docs

- You can tolerate some variability as long as it’s “good enough”

Avoid relying on prompting alone if:

- People keep saying “that sounds right, but it’s not”

- The same question gets noticeably different answers

- You’re spending more time patching prompts than improving outcomes

Use RAG when…

Accuracy must be grounded in your knowledge, and your knowledge changes.

Choose RAG if:

- The assistant must answer from policies, SOPs, manuals, tickets, product docs, contracts, or internal knowledge bases

- You need responses to reflect the latest updates without retraining

- You want answers that can be validated (e.g., show the source snippet or link)

- Your biggest risk is incorrect answers causing operational or compliance issues

Avoid using RAG as a “magic fix” if:

- Your content is messy, duplicated, outdated, or access isn’t controlled

- Users ask broad questions but your documents don’t contain clear answers

- Retrieval isn’t tuned and the system pulls irrelevant chunks (this makes answers worse, not better)

Use Fine-Tuning when…

The problem is behavior and consistency, not missing knowledge.

Choose fine-tuning if:

- You need outputs in a reliable format every time (JSON, tables, labels, strict templates)

- You want a consistent tone and style across teams and channels

- You have good examples of what “perfect output” looks like

- The model needs to learn patterns that prompting doesn’t stabilize (edge cases, domain phrasing, workflow-specific responses)

Avoid fine-tuning if:

- Your main problem is “it doesn’t know our latest information”

- Your content changes often and you expect the model to “stay updated”

- You don’t have clean examples (poor data leads to poor tuning)

A quick way to map your use case (pick the closest match)

- Internal policy / SOP assistant → RAG

- Customer support knowledge assistant → RAG (often with strong guardrails)

- Summaries that must follow a strict template → Fine-tuning (or structured prompting first)

- Classification / routing (tags, categories, intents) → Fine-tuning

- Marketing copy variations / email drafts → Prompting first

- Frequently changing product/pricing details → RAG

The next section covers what many teams end up doing in practice: combining approaches (hybrids) to get both grounding and consistency.

Section 5: The common winning approach: combine them

In real builds, it’s rarely “only prompting” or “only RAG” or “only fine-tuning.” The best results come from layering methods so each one covers what the others can’t.

Pattern 1: Prompting + RAG (most common baseline)

Use this when you want:

- Source-grounded answers (from your documents)

- Plus better instruction control (tone, formatting, refusal rules, step-by-step reasoning)

How it works in practice:

- RAG supplies the right context (policies, KB articles, product docs)

- Prompting tells the model how to use that context (answer style, what to do when info is missing, how to cite)

Best for: internal assistants, support copilots, policy Q&A, onboarding knowledge bots.
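In code, the split of responsibilities in this pattern often comes down to how the final prompt is assembled. A minimal Python sketch, assuming the retriever has already returned a list of passages; the `Chunk` type, the instruction wording, and the example passage are hypothetical, and the resulting string would be sent to whatever model API you use.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # where the passage came from, e.g. a file name or URL
    text: str    # the retrieved passage itself


# Prompt-side rules: how to use the context, how to cite, what to do when it is missing.
INSTRUCTIONS = (
    "Answer using only the numbered context passages below.\n"
    "Cite every passage you rely on as [1], [2], and so on.\n"
    "If the passages do not contain the answer, say you don't know "
    "instead of guessing.\n"
)


def build_prompt(question: str, chunks: list[Chunk]) -> str:
    """Combine retrieved context (the RAG half) with usage rules (the prompting half)."""
    context = "\n\n".join(
        f"[{i}] ({chunk.source}) {chunk.text}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return f"{INSTRUCTIONS}\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    # Hypothetical retrieved passage; in a real system this comes from the retriever.
    retrieved = [Chunk("expenses-policy.md", "Receipts are required for claims over the stated limit.")]
    print(build_prompt("Do I need a receipt for this claim?", retrieved))
```

Numbering the passages gives the model something concrete to cite, which is what lets a user open the source and check the answer.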

Pattern 2: RAG + Fine-tuning (accuracy + consistency)

Use this when you want:

- Answers grounded in your knowledge and

- Outputs that are predictable and structured

How it works in practice:

- RAG handles “what’s true right now” (freshness + traceability)

- Fine-tuning stabilizes “how to respond” (format, tone, classification labels, consistent steps)

Best for: regulated workflows, structured summaries, form filling, ticket triage, report generation where consistency matters.
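Fine-tuning improves consistency without guaranteeing it, so many teams still check every structured reply before it reaches a downstream system. A minimal Python sketch of such a check for the ticket-triage case, assuming a hypothetical JSON contract with `category`, `priority`, `summary`, and `sources` fields; the field names and label set are illustrative, not a standard.

```python
import json

# Hypothetical output contract for a ticket-triage assistant.
REQUIRED_FIELDS = {"category", "priority", "summary", "sources"}
ALLOWED_PRIORITIES = {"low", "medium", "high"}


def validate_triage(raw: str) -> list[str]:
    """Return the problems found in a model's JSON reply; an empty list means it passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    problems = [f"missing field: {name}" for name in sorted(REQUIRED_FIELDS - set(data))]

    priority = data.get("priority")
    if "priority" in data and (not isinstance(priority, str) or priority not in ALLOWED_PRIORITIES):
        problems.append(f"unexpected priority: {priority!r}")

    # Consistency comes from the format; grounding comes from the retrieved sources.
    sources = data.get("sources")
    if "sources" in data and (not isinstance(sources, list) or not sources):
        problems.append("no retrieved sources cited")
    return problems
```

Replies that fail the check can be retried, repaired, or routed to a person instead of being passed along silently.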

Pattern 3: Prompting + Fine-tuning (behavior-first systems)

Use this when you want:

- The model to behave in a specific way (style, structure, decision logic)

- And you don’t need heavy document grounding

How it works in practice:

- Fine-tuning teaches the response patterns

- Prompting still handles task instructions, constraints, and guardrails

Best for: classification/routing, standardized communication outputs, templated writing, workflow assistants with stable knowledge.

Implementation checklist (what to plan before you build)

This is the part that saves you from “it worked in testing” surprises. Use the checklist below based on the approach you picked.

If you’re using Prompt Engineering

Cover:

- Define the job clearly (what the assistant must do vs must never do)

- Add a small set of good examples (2–6) that show the exact output style you want

- Put guardrails in the prompt:

- “If you don’t know, say you don’t know”

- “Ask a clarifying question when required”

- “Follow the format exactly”

- Create a tiny test set (20–30 real questions) and re-run it after each prompt change

Watch-outs:

- If results change a lot across runs, you’ll need stricter structure (schemas) or a stronger approach (RAG/fine-tuning).
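A minimal Python sketch of the “tiny test set” habit from the checklist above. It assumes a hypothetical `answer_fn` that wraps your model call with the current prompt, and it uses a simple substring check as a stand-in for whatever grading you actually need (exact match on labels, schema checks, or human review). Re-running it after every prompt change and comparing the pass rate with the previous run is what catches regressions.

```python
from typing import Callable

# Hypothetical test cases: a real set would hold 20-30 questions from actual users.
TEST_SET = [
    {"question": "How do I reset my password?", "must_contain": "reset link"},
    {"question": "Can you approve my refund?", "must_contain": "don't know"},
]


def run_suite(answer_fn: Callable[[str], str], test_set: list[dict]) -> float:
    """Re-run every test question through answer_fn and return the pass rate."""
    if not test_set:
        raise ValueError("test set is empty")
    passed = 0
    for case in test_set:
        answer = answer_fn(case["question"])
        ok = case["must_contain"].lower() in answer.lower()
        print(f"{'PASS' if ok else 'FAIL'}: {case['question']}")
        passed += int(ok)
    return passed / len(test_set)


def stub_model(question: str) -> str:
    # Stand-in for "call the model with the current prompt"; replace with a real call.
    if "password" in question:
        return "Use the reset link on the sign-in page."
    return "Sorry, I can't help with that."


if __name__ == "__main__":
    baseline = run_suite(stub_model, TEST_SET)
    print(f"pass rate: {baseline:.0%}")
```

Here the stub fails the second case because it deflects instead of following the “say you don’t know” guardrail, which is exactly the kind of drift a rerun should surface.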

If you’re using RAG

Cover:

- Decide what content goes into retrieval:

- final policies, approved docs, KB articles, FAQs (avoid outdated drafts)

- Set a refresh plan:

- how often docs update, who owns updates, and how quickly answers must reflect changes

- Get retrieval basics right:

- chunking that matches how people ask questions

- embeddings for semantic search

- retrieval evaluation (test queries where the “right chunk” is known)

- Control access:

- ensure users only retrieve what they’re allowed to see

- Handle “no good context found”:

- return a safe answer, request more detail, or route to a human

Watch-outs:

- Bad or messy content produces bad answers; cleaning and scoping data matters as much as the model.
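A toy end-to-end sketch of the retrieval basics above, in plain Python. It rests on several assumptions. Word-overlap (Jaccard) scoring stands in for real embeddings. The documents and group names are hypothetical. `min_score` is an arbitrary threshold you would tune on your own test queries. What it does show is the order of operations the checklist implies: chunk the documents, filter by access rights before ranking, fall back when nothing relevant is found, and measure retrieval against queries whose correct document is known.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset  # access control travels with every chunk


def chunk_document(doc_id: str, text: str, groups: set[str], max_words: int = 60) -> list[Chunk]:
    """Split on blank lines (paragraphs), capping each chunk at max_words words."""
    chunks = []
    for para in text.split("\n\n"):
        words = para.split()
        for start in range(0, len(words), max_words):
            piece = " ".join(words[start:start + max_words])
            chunks.append(Chunk(doc_id, piece, frozenset(groups)))
    return chunks


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def score(query: str, chunk: Chunk) -> float:
    """Jaccard word overlap: a crude stand-in for embedding similarity."""
    q, c = _words(query), _words(chunk.text)
    union = q | c
    return len(q & c) / len(union) if union else 0.0


def retrieve(query: str, chunks: list[Chunk], user_groups: set[str],
             k: int = 3, min_score: float = 0.1) -> list[Chunk]:
    # Filter by permission before ranking so restricted text never reaches the prompt.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    ranked = sorted(visible, key=lambda c: score(query, c), reverse=True)
    return [c for c in ranked[:k] if score(query, c) >= min_score]


def answer_or_fallback(query: str, chunks: list[Chunk], user_groups: set[str]) -> str:
    hits = retrieve(query, chunks, user_groups)
    if not hits:
        # "No good context found": don't let the model guess; route to a person instead.
        return "Not found in approved documents; routing to a human."
    return "Answer from: " + ", ".join(sorted({h.doc_id for h in hits}))


def hit_rate(labelled: list[tuple[str, str]], chunks: list[Chunk],
             user_groups: set[str], k: int = 3) -> float:
    """Share of test queries whose known-correct document appears in the top-k results."""
    found = sum(
        any(c.doc_id == expected for c in retrieve(q, chunks, user_groups, k))
        for q, expected in labelled
    )
    return found / len(labelled)


if __name__ == "__main__":
    # Hypothetical documents with hypothetical access groups.
    docs = (
        chunk_document("hr-leave", "Employees accrue 20 days of annual leave.\n\n"
                       "Leave requests need manager approval.", {"staff"})
        + chunk_document("finance-travel", "Travel over budget needs finance director approval.",
                         {"finance"})
    )
    print(answer_or_fallback("How many days of annual leave do employees get?", docs, {"staff"}))
    print(answer_or_fallback("Who approves travel over budget?", docs, {"staff"}))
```

In the usage example, a staff user asking about travel approval gets the fallback rather than an answer, because the only relevant document is restricted to the finance group.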

If you’re using Fine-Tuning

Cover:

- Collect high-quality examples:

- real inputs → ideal outputs (clean, consistent, representative)

- Split data properly:

- training vs validation vs a locked “gold” test set

- Define what you’re optimizing:

- format accuracy, classification accuracy, tone adherence, step correctness, etc.

- Add regression testing:

- test before/after tuning so you don’t improve one area and break another

- Plan versioning and rollback:

- keep track of model versions and be able to revert quickly

Watch-outs:

- Fine-tuning won’t keep facts up to date; if knowledge changes often, pair it with RAG.
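A minimal Python sketch of the data-preparation steps above, under stated assumptions. Examples are (input, ideal_output) string pairs. A fixed random seed keeps the split reproducible. The output uses a chat-style `messages` layout similar to what several hosted fine-tuning services accept. The exact schema varies by provider, so check your provider’s documentation before uploading anything.

```python
import json
import random


def split_examples(examples, val_frac=0.1, gold_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then carve out validation and a locked gold set.

    Returns (train, validation, gold). Keep the gold set out of every training run
    and prompt iteration so before/after comparisons stay honest.
    """
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_gold = int(len(items) * gold_frac)
    n_val = int(len(items) * val_frac)
    gold = items[:n_gold]
    val = items[n_gold:n_gold + n_val]
    train = items[n_gold + n_val:]
    return train, val, gold


def to_chat_jsonl(pairs, system_prompt):
    """Serialize (input, ideal_output) pairs as one chat-style JSON record per line."""
    records = (
        {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_input},
            {"role": "assistant", "content": ideal_output},
        ]}
        for user_input, ideal_output in pairs
    )
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    # Hypothetical "real input -> ideal output" pairs.
    examples = [(f"Ticket text {i}", f'{{"label": "billing", "id": {i}}}') for i in range(50)]
    train, val, gold = split_examples(examples)
    print(len(train), len(val), len(gold))  # 40 5 5
    print(to_chat_jsonl(train[:1], "Classify the ticket. Reply with JSON only."))
```

Scoring the same gold set before and after each tuning run gives you the regression check and a concrete basis for deciding whether to roll back.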

Final pre-launch sanity check (for any approach)

Before you call it “production-ready,” confirm:

- It handles common questions and edge cases correctly (not just happy paths)

- It fails safely when context is missing or unclear

- You can measure quality over time (feedback + basic monitoring)

- You know who owns updates (prompts, retrieval content, or tuned model)

Map your choice into Generative AI Architecture

Now that you’ve picked the right lever (prompting, RAG, fine-tuning, or a hybrid), the next question is: how do you run it reliably at scale? That’s where Generative AI Architecture comes in, because real systems need more than a model call.

Your architecture is where you decide:

- how requests flow through your app and data sources

- how you enforce access control and safety rules

- how you evaluate output quality and monitor drift over time

- how you manage cost, latency, and version changes without breaking users


Alex Passler: Why This Former WeWork Executive Believes Genuine Hospitality Is Flex Workspace’s Next Competitive Edge

In an era where office attendance has become optional rather than obligatory, Alex Passler is betting that hospitality – real hospitality, not just the marketing buzzword – will separate winning flexible workspaces from those simply filling desks.

One month into operations at Vallist’s inaugural London location, Finlaison House in Holborn, early results suggest he might be right. But Passler’s interpretation of hospitality looks nothing like the community-focused, high-energy environments that defined the previous generation of flex space.

“Most flex spaces hit you with noise – both visually and acoustically,” Passler explains. “At Finlaison House, the first impression is deliberately restrained. You feel the quality of materials, the acoustic separation, the natural light. It feels closer to a private members’ building than a flexible workspace.”

From WeWork to Vallist: A Different Philosophy

Passler’s perspective comes from a unique vantage point. As the former Head of WeWork’s Asia Pacific and Americas Real Estate teams, he witnessed firsthand what happens when growth and scale override operational fundamentals.

“The biggest lesson was that a flexible workspace only works when it’s built for the long term,” Passler reflects. “At WeWork, the product was compelling, but the model often prioritized speed and scale over durability – financially, operationally, and architecturally.”

At Vallist, the approach inverts that formula entirely. By partnering directly with landlords through management agreements rather than taking on lease risk, the pressure to chase short-term occupancy disappears. “We’re focused on building value into the asset, not just filling desks,” Passler says. “That allows us to invest properly in design, soundproofing, technology, and service – and to operate with patience rather than pressure.”

What Hospitality Actually Means

Luigi Ambrosio, Vallist’s Head of Operations, pushes back against the industry’s casual use of “hospitality-led” as mere rebranding of what used to be called “community.”

“It’s real care for people,” Ambrosio emphasizes. “When someone opens a door, you don’t know what is happening before they enter. There is a very fast understanding of what you have in front of you and supporting them to shift what the experience will be. You need a lot of sensitivity and compassion.”

In practice, this means members receive personalized attention from the moment they arrive. The hospitality team greets them at the door, brings coffee before they’re seated, and provides comprehensive onboarding on how the space works – all within the first ten minutes.

“Someone who walks in to use a lounge for the day gets such personalized attention,” notes Steve Tillotson, Vallist’s Sales Director. “That time and personal attention to detail that our hospitality staff takes with each member has been impressive.”

This high-touch approach stands in deliberate contrast to the self-service, technology-forward operations that many operators have adopted. “With AI generation and everything automated, there is a lot of neglecting the first image,” Ambrosio observes. “What Vallist pushes is: you come here and we will do it for you. You are just here to be productive and we’ll do the rest.”

The Market Responds

Early results suggest professionals are hungry for this level of quality. Passler reports that demand for premium flexible workspace is accelerating faster than anticipated.

“The expectations of what people are looking for are growing tremendously,” Passler notes. “That’s probably the biggest surprise over the last month, which is great for us because obviously we fit into that bracket.”

The work club membership – Vallist’s alternative to traditional hot-desking or co-working – has gained traction particularly quickly. With 24/7 access at competitive launch pricing, members gain what Ambrosio describes as “a curated, smaller scale, boutique environment” that won’t become overcrowded.

“A lot of people are used to going into co-work spaces that open, become super popular, get overpopulated, and then everyone gets fed up,” Tillotson explains. “The promise we’ve been making is that we won’t let it get to that state.”

Investing Where It Matters

The landlord partnership model enables investments that would damage traditional flex economics. At Finlaison House, specifications include robust cybersecurity appropriate for professionals handling sensitive information, comprehensive soundproofing, and hospitality infrastructure that prioritizes human interaction over automation.

“We’ve invested in areas which other flex operators don’t invest in because for most businesses, it damages the economics,” Passler notes. “Where the building is located, we’re surrounded by some of the largest law firms in the world, right behind the Royal Courts of Justice. Their demands for secure networks are pretty extreme, so we’re catering for that level of member.”

In a market still finding its footing after years of turbulence, that patient, quality-first approach may prove to be the competitive advantage that matters most.

About Vallist

Vallist delivers premium flexible workspace through landlord partnerships that eliminate lease risk and enable patient investment in design, technology, and hospitality. Founded by former WeWork executive Alex Passler, Vallist creates hospitality-led environments for professionals who prioritize quality, privacy, and genuine service. Learn more at vallist.com.

Top Reputation Management Tools to Safeguard Your Brand in 2026

In 2026, a brand’s digital footprint is more than just a marketing asset: it is its primary survival mechanism. As AI-generated content and hyper-fast social algorithms dominate the web, the ability to monitor and influence public perception in real time has become the ultimate competitive advantage.

Staying ahead of the conversation requires tools that offer more than just basic keyword tracking. Here are the top 5 tools defining reputation management this year:

1. BrandMentions: The Gold Standard for Real-Time Monitoring

While many tools focus on a single niche, BrandMentions remains the most comprehensive solution for dedicated reputation management. It doesn’t just skim the surface; it crawls blogs, news sites, forums, and social networks to ensure you are aware of every conversation. In 2026, its AI-powered sentiment analysis is unrivaled, allowing brands to distinguish between casual chatter and potential crises within seconds.

Key Features:

- Real-Time Alerts: Immediate notifications across the web and social media.

- Sentiment Tracking: Sophisticated categorization of mentions to prioritize responses.

- Competitor Spying: Uncover the strengths and weaknesses of rivals in real time.

2. Podium: The Local Engagement Specialist

For businesses that rely heavily on local trust and direct customer communication, Podium is a powerhouse. It focuses on centralizing customer interactions and review management, making it easy for regional brands to collect feedback via SMS and manage their Google and Yelp ratings from a single dashboard.

3. HubSpot: The Integrated CRM Monitor

HubSpot continues to be a favorite for marketing teams that want their reputation data tied directly to their sales funnel. By integrating social monitoring into its broader CRM ecosystem, HubSpot allows users to see exactly how social sentiment impacts lead generation and customer retention.

4. Google Alerts: The Essential Web Monitor

Google Alerts remains the industry’s most accessible “set-it-and-forget-it” tool. While it lacks the deep social analytics of a dedicated platform, it is an essential free resource for monitoring the indexed web, ensuring you never miss a major news headline or blog post mentioning your brand.

5. AnswerThePublic: The Consumer Intent Explorer

Modern reputation management is as much about understanding “why” people talk as it is about “what” they say. AnswerThePublic mines search engine data to visualize the questions and concerns customers have. It’s an invaluable tool for brands looking to proactively address common pain points before they turn into negative reviews.

In 2026, the traditional marketing “monologue” has officially been replaced by a global, AI-mediated dialogue. Your brand is no longer just what you say it is in a polished advertisement; it is the sum of every review, forum mention, and AI-generated summary across the web.

As we’ve seen, reputation management in this era is not a defensive chore; it is a high-stakes growth strategy. Whether it’s the real-time vigilance of BrandMentions or the strategic content insights of other apps, the tools you use are your eyes and ears in a market that never stops talking.

Managing your brand’s reputation is about building a buffer of goodwill. When you listen to your audience and engage authentically, you aren’t just protecting a name; you’re building a resilient, human-centric business that can thrive in any digital climate.

Company Details

Company name: BrandMentions

Contact person name: Cornelia Cozmiuc

Contact no: +40758395544

Address: Chimiei 2

City: Iasi

County: Iasi

Country: Romania

Mail: cornelia.cozmiuc@brandmentions.com

Website: https://brandmentions.com/

Top 5 Diamond Engagement Ring Buying Mistakes to Avoid: Expert Guide from Hatton Garden

I’ve been helping couples choose diamond engagement rings for over a decade, and honestly, it breaks my heart when I see someone make a costly mistake that could have been easily avoided.

Whether it’s in my current showroom at Mouza or during my years working in other Hatton Garden stores, I’ve watched too many couples focus on the wrong things or overlook crucial details that really matter.

The thing is, buying an engagement ring shouldn’t feel overwhelming or stressful. Yes, it’s a significant purchase both emotionally and financially, but with the right guidance, you can navigate this process confidently and end up with something truly special that you’ll treasure for decades.

I want to share the five most common mistakes I see couples make, not to scare you, but to help you avoid them entirely.

Think of this as friendly advice from someone who genuinely wants you to have the best possible experience and outcome.

After all, this diamond ring is going to be part of your daily life for years to come, so let’s make sure we get it right from the start.

Diamond Engagement Ring Mistake 1: Focusing on Carat Weight Instead of Diamond Measurements

Here’s What Usually Happens

You know what I hear almost every day? “I want a one-carat natural diamond.” And I completely understand why: there’s something appealing about that round number, isn’t there?

But here’s the thing that might surprise you: carat weight is actually one of the least important factors when it comes to how impressive your diamond will look.

I’ve had countless clients walk into our Hatton Garden showroom absolutely convinced they need that magical “one carat,” only to fall head over heels for a 0.90-carat stone that looks bigger and more brilliant than the 1.10-carat diamond sitting right next to it. It happens more often than you’d think!

Why Diamond Size Measurements Matter More Than Carat Weight

Let me explain this in a way that actually makes sense.

Carat weight is just mass; it’s like weighing two different-shaped boxes and expecting them to take up the same amount of space on your shelf.

A diamond, whether natural or lab-grown, can hide a lot of its weight in places you’ll never see, like a deep pavilion (the lower part of the stone) or a thick girdle.

What you actually see when you look at a ring is the diamond’s face-up size: its length and width measurements.

I always tell my customers to think of it like buying a television.

You don’t ask for “a 5-kilogram TV,” do you? You ask for a 55-inch screen because that’s what you’ll actually be looking at.

Here’s a perfect example: a round brilliant diamond measuring 6.5mm across will look noticeably larger than one measuring 6.2mm, even if they weigh exactly the same.

And if you’re looking at fancy shapes like ovals or emeralds? The length-to-width ratio becomes even more important for getting the look you’re after.

The Money-Saving Secret for Natural Diamond Engagement Rings

Want to know something that could save you hundreds of pounds?

Natural diamonds just under the popular carat weights (0.90ct instead of 1.00ct, or 1.85ct instead of 2.00ct) often cost significantly less whilst looking virtually identical.

It’s one of the best-kept secrets in the diamond world, and I’m happy to share it with you.

If you’re looking for lab-grown diamond rings, this approach becomes even more valuable because you can put those savings towards better cut quality or a more beautiful setting. It’s all about getting the most visual impact for your budget.

What I’d Do If I Were You

Next time you’re looking at diamonds, ask to see the exact measurements and compare stones with similar dimensions but different weights.

You’ll be amazed at how much more sense it makes when you focus on what you can actually see rather than what the scales say.

And please, consider your partner’s hand size and style. A diamond that looks perfect on one person might feel overwhelming or underwhelming on another.

The goal is finding measurements that create the visual impact you’re dreaming of, regardless of the specific carat weight needed to get there.

Engagement Ring Mistake 2: Choosing Delicate Bands Without Proper Depth

The Delicate Band Trend and Its Hidden Problems

I completely understand the appeal of delicate, minimalist bands; they’re absolutely gorgeous and very on-trend right now.

But I’ve seen too many heartbroken clients come back to me with bent, uncomfortable, or damaged rings because they focused only on how thin the band looked from above.

Here’s what most people don’t realise: there’s a huge difference between band width (what you see from the top) and band depth (the thickness from top to bottom).

You can have a band that looks appropriately delicate from above but is actually dangerously thin when you look at it from the side.

Why Band Depth Matters More Than Width for Engagement Rings

After years of experience and seeing what works (and what doesn’t), I always recommend a minimum band depth of 1.85mm for diamond engagement rings that will be worn daily.

I know that might sound technical, but trust me on this one: it’s the difference between a ring that lasts a lifetime and one that causes problems within months.

Think about it this way: your engagement ring is going to be on your finger every single day, through hand washing, exercising, sleeping, and all of life’s little bumps and knocks. A band that’s too thin will bend out of shape, feel sharp against your other fingers, and might not even hold the diamond setting securely.

Finding the Perfect Balance for Diamond Engagement Rings

The good news is that you can absolutely achieve that delicate, refined look whilst still having a structurally sound ring.

It’s all about the proportions. A band that’s 2.0mm wide with 1.85mm depth will feel completely different from one that’s 1.8mm wide with 2.2mm depth, even though both are perfectly strong.

At Mouza, we spend a lot of time getting these proportions just right for each design. Our London workshop allows us to be really precise about these measurements, ensuring your ring looks exactly how you want it whilst meeting our durability standards.

My Honest Advice on Engagement Ring Band Selection

When you’re trying on diamond rings, don’t just look at them from above. Ask to see the side profile and understand the complete dimensions. Try on rings with different depth measurements so you can feel the difference in comfort and substance.

Remember, this ring is going to be with you for decades. I’d much rather you choose something that feels substantial and comfortable than something that looks perfect in photos but becomes a source of worry or discomfort in daily life.

Trust me, you’ll thank yourself later for prioritising long-term satisfaction over momentary aesthetic preferences.

Mistake 3: Not Considering Work and Daily Activities When Choosing Diamond Engagement Rings

The Showroom vs. Real-Life Reality

You know what I see all the time?

Couples who fall in love with a ring in a showroom or online but haven’t thought about how it’ll work in their actual daily life.

I get it: when you’re caught up in the excitement and romance of choosing diamond engagement rings, it’s easy to focus purely on how beautiful something looks under lovely lighting or on a model’s hand.

But here’s the thing: your partner is going to wear this ring every single day, through work, exercise, cooking, cleaning, and everything else life throws at them.

What looks stunning online or under spotlights might become impractical or even problematic in their real-world routine.

Different Professions Require Different Engagement Ring Styles

I’ve learned so much about different professions over the years! Healthcare workers often need rings that can handle frequent hand washing and glove wearing.

Teachers might prefer lower profiles that won’t catch on papers or distract in the classroom. If your partner works with their hands (whether they’re a mechanic, chef, or craftsperson), we need to think about safety and practicality.

Even office workers have considerations. Does your partner type a lot? A high setting might catch on keyboards. Are they in client-facing roles where the ring needs to look elegant but not ostentatious? These details matter more than you might think.

Active Lifestyles and Engagement Ring Durability

If your partner is active, whether running, cycling, rock climbing, or just spending time at the gym, we need to think about security and comfort during movement.

I’ve seen too many people worry constantly about their rings during activities they love, and that’s not what we want.

Musicians have unique needs, too. Pianists need rings that won’t interfere with their finger movement, whilst string players have to consider how settings might affect their technique. Even frequent travellers might prefer lower-profile designs that are less likely to catch on luggage or clothing.

Matching Engagement Ring Style to Personality

Beyond the practical stuff, the ring should really reflect who your partner is. If they gravitate towards minimalist design in their clothes, home, and other choices, they’ll probably prefer clean, simple ring designs.

If they love ornate details and aren’t afraid of statement pieces, we can explore more elaborate options.

Think about their existing jewellery too. A ring that clashes with their personal style will likely spend more time in the jewellery box than on their finger, and that’s not what we’re aiming for.

How to Choose the Right Engagement Ring for Your Lifestyle

The best approach?

Pay attention to your partner’s daily routine, or better yet, have an honest conversation about their preferences and practical needs.

I always encourage couples to think beyond the proposal moment to consider how the ring will function in their everyday life.

At Mouza, we love having these conversations because they help us guide you towards something that’ll bring joy every single day, not just on special occasions. After all, the perfect engagement ring is one that enhances your life together rather than creating any worries or limitations.

Mistake 4: Not Researching Engagement Ring Warranty and Service Options

The Hidden Costs of Poor Engagement Ring Aftercare

Here’s something that really frustrates me: couples who spend months researching the perfect Hatton Garden engagement rings, comparing every detail of the diamonds and settings, but never ask about what happens after the purchase.

I’ve seen people save a few hundred pounds upfront only to spend thousands more over the years on basic services that should have been included.

Your engagement ring isn’t a “buy it and forget it” purchase. Over the decades you’ll wear it, it’ll need cleaning, occasional adjustments, maybe some repairs, and definitely some maintenance to keep it looking its best.

The quality of aftercare can make or break your long-term satisfaction with your purchase.

What Comprehensive Engagement Ring Aftercare Should Include

Let me tell you what proper aftercare looks like, because I think you deserve to know. At Mouza Hatton Garden, we’ve built our entire business model around the idea that buying a ring is just the beginning of our relationship with you.

We offer a free lifetime warranty that covers everything: not just manufacturing defects, but even accidental damage.

I know that sounds almost too good to be true, but we mean it.

Life happens, and we don’t think you should have to worry about the financial consequences of everyday accidents.

Our lifetime cleaning and servicing means your ring will always sparkle like the day you first saw it.

We never charge for resizing, understanding that bodies change over time whether it’s seasonal fluctuations, pregnancy, or just the natural changes that come with life.

Here’s something that sets us apart: we never charge for repairs. Ever. Whether it’s a loose prong, a worn setting, or minor damage from daily wear, our skilled craftspeople will take care of it without any additional cost to you.

We also provide free valuation certificates whenever you need them, which is crucial for insurance purposes and gives you peace of mind about your investment.

Why Engagement Ring Aftercare Matters More Than You Think

I’ve had clients come to me from other jewellers, frustrated and out of pocket because they were charged for basic services or told their warranty didn’t cover “normal wear.” That’s just not right in my opinion.

When you know your ring is fully protected and maintained, you can actually enjoy wearing it without constantly worrying about potential costs or damage.

That peace of mind is priceless and really enhances your daily experience with the piece.

Questions to Ask About Engagement Ring Warranties

Before you make your final decision anywhere, ask detailed questions about aftercare policies. What’s covered? What isn’t? Are there any hidden fees? What happens if you move away from the area?

Some jewellers offer great initial prices but charge for every little service afterwards. Others have warranties that sound comprehensive but are full of exclusions that leave you vulnerable when you actually need help.

Mouza’s Lifetime Engagement Ring Protection Promise

At Mouza, we believe that choosing an engagement ring should be the start of a lifelong relationship built on trust and support.

Our comprehensive aftercare isn’t just about protecting your investment; it’s about ensuring that your ring continues to bring you joy and confidence every single day.

When you choose us, you’re not just buying a ring; you’re gaining a partner who’ll be there for every milestone, every maintenance need, and every question that comes up along the way. That’s the kind of service I wish every couple could experience.

Mistake 5: Delaying Engagement Ring Insurance Coverage

The “I’ll Sort Out Insurance Later” Trap

I wish I could tell you how many times I’ve had this conversation: a couple comes in, devastated because they’ve lost their uninsured engagement ring, and now they’re facing the reality of replacing it at current market prices.

It’s honestly one of the worst parts of my job, because it’s so easily preventable.

I get it: after spending months choosing the perfect diamond engagement ring and planning the proposal, insurance feels like just another boring task on the to-do list.

But please, please don’t put this off. The peace of mind alone is worth it, never mind the financial protection.

Why Standard Home Insurance Isn’t Enough for Engagement Rings

Here’s something that surprises most people: your standard home or renters’ insurance probably covers jewellery only up to £1,000–£2,500 in total.

So if you’ve invested £5,000 in a beautiful ring, you’d only get partial compensation if something happened. That’s a pretty significant gap, isn’t it?

Even worse, most standard policies don’t cover things like “mysterious disappearance” (which is insurance speak for “I have no idea where it went”), damage from normal wear, or stones falling out of settings. These are actually quite common issues with engagement rings, so you’d be left without coverage exactly when you need it most.

What Proper Engagement Ring Insurance Coverage Includes

Specialised jewellery insurance is completely different.

It covers theft, loss, damage, and yes, even mysterious disappearance.

You get replacement cost coverage, which means you can get a ring of similar quality regardless of how prices have changed since your original purchase.

Many policies even cover damage from normal wear, such as loose prongs or bent settings that develop over time.

This bridges the gap between warranty coverage and insurance, so you’re protected no matter what happens.

The worldwide coverage is brilliant too, especially if you travel. Your ring is protected whether you’re at home, on holiday, or anywhere else in the world.

How to Get Engagement Ring Insurance Quickly

Start the process as soon as you’ve made your purchase decision, ideally before you even take the ring home.

Most insurers need detailed appraisals and documentation, and coverage doesn’t typically start until everything’s in place.

When comparing insurers, pay attention to coverage limits, deductibles, and exclusions. Some companies specialise in jewellery and often offer better terms than general insurance providers.

The Peace of Mind That Comes with Proper Coverage

Here’s what I love about proper insurance: it lets you actually enjoy your ring without constant worry. You can wear it confidently, knowing that if something unexpected happens, you’re protected.

I’ve seen couples who are so worried about their uninsured rings that they barely wear them, keeping them locked away for “special occasions.” That’s not what an engagement ring is for! It should be part of your daily life, and proper insurance makes that possible.

My Personal Recommendation for Ring Insurance

Don’t wait. Seriously. Get quotes from a few specialised jewellery insurers as soon as you’ve chosen your ring.

The annual cost is typically quite reasonable compared to the value of your ring, and the protection it provides is invaluable.

Keep detailed records of everything: purchase receipts, valuation certificates, and photos.

This documentation will be crucial if you ever need to make a claim, and it helps establish the history and value of your piece.

Trust me, the small annual premium is nothing compared to the devastating cost of replacing an uninsured ring.

It’s one of those things where you’ll never regret having it, but you’ll definitely regret not having it if something goes wrong.

Conclusion: Making Smart Engagement Ring Decisions

I hope sharing these insights helps you feel more confident about your engagement ring journey.

The truth is, avoiding these five mistakes isn’t complicated once you know what to look for – it’s just about shifting your focus to the things that really matter for long-term happiness and value.

Remember: prioritise diamond measurements over carat weight, ensure your band has proper depth for durability, think about real-life wearability, investigate aftercare services thoroughly, and get proper insurance coverage from day one. These aren’t just technical considerations; they’re the foundation of a ring that’ll bring joy for decades to come.

What I love most about my work is helping couples navigate this process with confidence rather than confusion. We’ve built everything around the idea that buying an engagement ring should be exciting and enjoyable, not stressful or overwhelming.

Your engagement ring is going to be part of your daily life for years and years. It’ll be there for all the big moments and the quiet everyday ones, too. By making informed decisions now, focusing on quality, practicality, and proper protection, you’re ensuring that this special piece will continue to make you smile every time you look at it.

About the Author: Amr Ramadan, DGA, is the Founder of Mouza Fine Jewellery and a diamond researcher who studied at Gem-A in London. With years of experience helping couples in Hatton Garden showrooms, Amr is passionate about guiding people towards engagement ring choices that bring lasting happiness and value.

Illuminating Interior Design: Elevate Every Space with Thoughtful Lighting

Lighting is more than a functional necessity—it is the soul of interior design. The right lighting transforms spaces, enhances architecture, and creates atmosphere. From dramatic Vorelli Staircase Chandeliers to minimalist floor lamps, curated lighting choices define how a home looks, feels, and flows.

Chandeliers: The Statement of Elegance

Vorelli Chandeliers remain the ultimate symbol of luxury and sophistication. Whether classic crystal or modern sculptural designs, chandeliers anchor a room and draw the eye upward. In living rooms and dining areas, they create a focal point that instantly elevates the space, reflecting light beautifully and adding a sense of grandeur.

Floor Lamps: Style Meets Function

Vorelli floor lamps offer flexibility without compromising style. Perfect for living rooms, bedrooms, and reading corners, they add layered lighting while introducing texture and character. From sleek contemporary designs to bold artistic pieces, floor lamps enhance comfort while making a subtle design statement.

Wall Lights: Architectural Accents

Wall lights add depth and dimension to interiors. Used to highlight artwork, create ambient glow, or frame architectural features, they bring balance to lighting schemes. In hallways, bedrooms, and bathrooms, wall lights deliver both practicality and refined visual appeal.

Ceiling Lights: Clean, Modern Illumination

Ceiling lights provide essential general lighting while maintaining a clean aesthetic. Recessed, flush, or semi-flush designs work seamlessly in modern homes, ensuring even illumination without overpowering the décor.

Kitchen Lighting: Where Design Meets Precision

In the kitchen, lighting must be both beautiful and functional. Vorelli ceiling lights provide overall brightness, while targeted lighting enhances work areas. Well-placed fixtures ensure clarity for cooking while contributing to a polished, contemporary look.

Staircase Long Chandeliers: Vertical Drama

A long chandelier cascading through a staircase transforms transitional spaces into architectural showpieces. These dramatic fixtures emphasise height, movement, and elegance, turning staircases into unforgettable design moments.

Breakfast Bar Pendant Lights: Modern and Inviting

Vorelli pendant lights above breakfast bars combine practicality with personality. They define the space, provide focused lighting, and create an inviting atmosphere for casual dining and social moments. Whether minimalist or decorative, pendants add rhythm and balance to open-plan layouts.

Dining Room Chandeliers: Setting the Mood

Dining room lighting sets the tone for every gathering. A well-chosen Vorelli chandelier above the table creates intimacy, warmth, and visual harmony. Crystal chandeliers, in particular, bring timeless elegance and sparkle, enhancing both everyday meals and special occasions.

Hallway Crystal Chandeliers: A Grand Welcome

Hallways deserve just as much attention as main living spaces. Crystal chandeliers in hallways create a striking first impression, guiding guests through the home with light, luxury, and refined charm.

Light That Defines Living

From kitchens to staircases, hallways to dining rooms, the right combination of chandeliers, floor lamps, wall lights, and ceiling lights brings interiors to life. Thoughtfully designed lighting doesn’t just illuminate; it tells a story, elevates design, and turns houses into homes. For more information on our chandelier and lighting designs, visit www.vorelli.co.uk.

Mefron Technologies Secures Its First Private Equity Investment from Motilal Oswal Principal Investments and India SME Investments

Mefron Technologies, an electronics design and manufacturing services (EMS) company, has raised its first private equity round for an undisclosed amount from Motilal Oswal Principal Investments and India SME Investments.

Founded in 2022, Mefron delivers comprehensive end-to-end manufacturing solutions encompassing PCB assembly, tooling, plastic injection moulding, box build, and cable and wire harness manufacturing. The company serves multiple OEM segments and operates under internationally recognised quality standards, including ISO 9001, ISO 13485 for medical electronics, and IATF 16949 for the automotive industry.

“The company aims to leverage its high level of automation using principles of Industry 5.0 and proprietary software for various operations to achieve higher yields and timely delivery, two key problems plaguing Indian EMS companies,” said the company’s founder and Director, Hiren Bhandari.

Both Motilal Oswal Principal Investments and India SME Investments bring strong domain expertise and a proven track record in supporting niche and scalable manufacturing businesses. Motilal Oswal has previously invested in leading EMS companies such as Dixon Electronics and VVDN, while India SME Investments has backed manufacturing and infrastructure-led businesses including Simpolo Ceramics, SBL Energy, and Venus Pipes & Tubes.

“The confidence and trust shown by such notable investors underlines Mefron’s capabilities and potential to become a leader in Indian electronics manufacturing,” said founder and Director, Bhavyen Bhandari.

Highlighting the sector outlook and Mefron’s strategic differentiation, Mitin Jain, Founder and Managing Director of India SME Investments, said, “While the tailwinds are strong for entire electronics manufacturing through various government policies, local ecosystem development, and huge market opportunity, Mefron with its strong and proven design capabilities differentiates from many players in this segment as without ODM capability, companies face margin pressure.”

Mefron serves leading OEM customers across grooming and personal care devices, mobile accessories, access control systems, biometric devices, and electric vehicle applications. The company exports its products to more than 30 countries and operates subsidiaries in China and Singapore to support global sourcing and supply-chain management.

The newly raised capital will be utilised to expand manufacturing capacity, strengthen advanced automation-driven processes, and scale business development initiatives. Mefron also plans to accelerate its international growth strategy, with a focused push into the European and North American markets.

Company Information

Company: Mefron Technologies (India) Private Limited

Contact Person: Robin Bunker

Email: sales@mefron.com

Country: India

City: Ahmedabad

Website: https://www.mefron.com/

How to Choose a Gen AI Consulting Company: Checklist, RFP Questions & Scoring

Something is already happening inside your org: a leader asked for a Gen AI plan, a team shipped a flashy demo, and now reality has hit. Data isn’t clean, access isn’t simple, security has questions, and nobody can clearly say who owns the model after go-live.

If you’ve been stuck in that loop, it usually sounds like this:

- “We proved it works… but we can’t deploy it.”

- “We don’t trust the outputs enough to automate decisions.”

- “Everything breaks when we try to connect it to real systems.”

- “The vendor says ‘2 weeks’, but can’t explain monitoring, rollback, or governance.”

This guide is written for that exact moment.

In the next few minutes, you’ll get a practical, production-first checklist for choosing a Gen AI consulting partner, based on what actually makes Gen AI succeed after the demo: integration, monitoring, security, governance, and clear ownership. No theory. No hype. Just the criteria that help you shortlist firms.

Decide if you need Gen AI consulting or not

Before you start comparing firms, pause for a second and answer one thing honestly:

Are you looking for advice, or are you trying to get something into production without breaking systems, compliance, or timelines?

Because “Gen AI consulting” means very different things depending on where you are right now.

You likely need a Gen AI consulting partner if…

1) Your Gen AI work keeps stalling after the demo

If pilots look good but stop at “we’ll scale it later,” the blocker is usually not the model. It’s the messy middle: data access, integration, approvals, monitoring, and ownership.

2) You need Gen AI to work inside real systems (not in a sandbox)

If your use case touches ERP/CRM/ITSM tools, customer data, payments, tickets, claims, healthcare records, or regulated workflows, you’re dealing with integration and controls, not just prompts and prototypes.

3) Security, privacy, or compliance will get involved (and they should)

If you already hear questions like:

- “Where will data be stored?”

- “Who can access the model outputs?”

- “How do we audit decisions?”

If so, you need a partner that can design with governance from the start, not add it later.

4) You don’t have a clear “owner” after go-live

If there’s no plan for who monitors performance, handles incidents, retrains models, and owns outcomes, production Gen AI becomes a permanent escalation path.

You may not need Gen AI consulting if…

1) You have a mature data + engineering foundation already

You’ve got stable pipelines, clear data ownership, monitoring, and a team that can deploy and maintain models without vendor dependency.

2) Your scope is small and internal

You’re exploring low-risk, internal productivity use cases where failure won’t trigger compliance, customer impact, or operational downtime.

Quick self-check (answer yes/no)

If you say “yes” to 2 or more, consulting is usually worth it:

- Do we need this Gen AI use case to run inside core systems?

- Will security/compliance need sign-off before go-live?

- Have previous pilots stalled due to production constraints?

- Do we lack clear ownership for monitoring + incident response?

If that sounds like your situation, keep going, because the next sections will help you choose the right type of Gen AI consulting firm, not just a popular one.

Define success before you evaluate firms (your “scope lock”)

If you skip this step, every vendor will sound “perfect.”

Because when success isn’t defined, a polished demo can feel like delivery.

So before you compare companies, lock three things: outcome, proof, and production conditions. This is what SMEs do first, because it prevents you from buying capability you don’t need (or missing capability you do need).

1) Start with the business outcome (not the model)

Ask: What do we want to improve, measurably, in the next 90–180 days?

Examples (use the language your leaders already care about):

- Reduce cycle time (claims, tickets, onboarding, reconciliations)

- Increase straight-through processing / automation rate

- Reduce manual touches per case

- Improve decision accuracy (fraud flags, triage routing, forecasting)

- Cut operational cost or backlog

- Reduce risk exposure (audit findings, policy violations)

Pick 2–3 outcomes that matter most to your leadership and attach the KPI you will use to measure each one.

2) Decide what proof you will accept (so you don’t get trapped by “it works”)

This is where most teams get burned. Vendors say “it works,” but you need evidence that it works in your reality.

Define proof like this:

- Accuracy / quality: what “good output” means (precision/recall, error rate, acceptance rate)

- Reliability: what happens when inputs change, APIs fail, or data arrives late

- Speed: latency requirements if it’s real-time (payments, fraud, triage)

- Business impact: what metric moves because of it (not just “better insights”)
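To make “accuracy / quality” concrete, here is a minimal Python sketch that computes precision, recall, and error rate from a labeled evaluation sample, plus acceptance rate from reviewer decisions. It assumes a binary task (for example “flag” vs “don’t flag”) and a hypothetical list of reviewer accept/reject outcomes; your own definition of “good output” may need different metrics.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    precision: float
    recall: float
    error_rate: float
    acceptance_rate: float


def _safe_div(num: int, den: int) -> float:
    """Return num/den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def quality_report(predicted: list[bool], actual: list[bool],
                   accepted: list[bool]) -> QualityReport:
    """Compute basic quality metrics for a binary task.

    predicted: what the system flagged (True = positive)
    actual:    ground-truth labels from a reviewed sample
    accepted:  whether a human reviewer accepted each output as-is
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    tp = sum(p and a for p, a in zip(predicted, actual))      # correct flags
    fp = sum(p and not a for p, a in zip(predicted, actual))  # false alarms
    fn = sum(a and not p for p, a in zip(predicted, actual))  # missed cases
    errors = sum(p != a for p, a in zip(predicted, actual))
    return QualityReport(
        precision=_safe_div(tp, tp + fp),
        recall=_safe_div(tp, tp + fn),
        error_rate=_safe_div(errors, len(actual)),
        acceptance_rate=_safe_div(sum(accepted), len(accepted)),
    )
```

For example, four cases with one correct flag, one false alarm, and one miss give precision 0.5, recall 0.5, and error rate 0.5. Agree on which of these numbers is the “proof” before any vendor shows you results.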

Capture each outcome in a mini template:

- Outcome:

- KPI:

- Proof we’ll accept:
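If you track the template in a repo or notebook rather than a slide, a structured record keeps each outcome tied to its KPI and an explicit pass/fail target. This is a minimal Python sketch; the field names, the single numeric target per KPI, and the example values are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScopeLock:
    outcome: str            # e.g. "Reduce claims cycle time"
    kpi: str                # e.g. "median cycle time (hours)"
    baseline: float         # value measured before the pilot
    target: float           # value the pilot must reach to count as proof
    lower_is_better: bool = True

    def proof_met(self, measured: float) -> bool:
        """Return True if the measured KPI meets the agreed target."""
        if self.lower_is_better:
            return measured <= self.target
        return measured >= self.target

    def improvement(self, measured: float) -> float:
        """Relative change versus baseline, signed so positive = better."""
        if self.baseline == 0:
            raise ValueError("baseline must be non-zero")
        change = (self.baseline - measured) / self.baseline
        return change if self.lower_is_better else -change
```

With a hypothetical baseline of 48 hours and a target of 36, `ScopeLock("Reduce claims cycle time", "median cycle time (hours)", 48.0, 36.0).improvement(36.0)` returns 0.25, and `proof_met(40.0)` returns False: “it works” is not enough unless the number moves.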

3) Define “production conditions” (the part that separates real delivery from pilots)

A solution isn’t production-ready just because it runs. It’s production-ready when it can survive:

- system integration,

- security reviews,

- ongoing monitoring,

- incidents,

- and ownership after go-live.

Lock these conditions early:

- Where it runs: cloud/on-prem/hybrid, tenant boundaries

- What it touches: systems + data domains (ERP/CRM/ITSM/customer/PII)

- Who owns it: operations model post go-live (monitoring, retraining, incidents)

- Controls: access control, audit trail, approvals, rollback plan

- Total cost: not just build cost, but also running, monitoring, and change management

Turn these conditions into a short “Production Readiness Checklist” of 6–8 items that every shortlisted firm must address.

Quick readiness triage (so you don’t hire the wrong type of partner)

This isn’t a full “Gen AI maturity assessment.” It’s a fast triage SMEs use to avoid a common mistake:

Hiring a firm that’s great at demos when your real blocker is data, integration, security, or ownership.

Take 10 minutes and check these five areas. You’re not trying to score yourself; you’re trying to identify what kind of partner you actually need.

1) Data readiness: can you feed Gen AI with something trustworthy?

Ask yourself:

- Do we know where the required data lives (and who owns it)?

- Can we access it without weeks of approvals and one-off scripts?

- Is the data consistent enough to make decisions (not just generate summaries)?

- Do we have basic definitions aligned (customer, claim, ticket, transaction)?

Green flags (good sign):

- Named data owners + documented sources

- Consistent identifiers and a clear “source of truth”

- Known quality checks (even if imperfect)

Risk signal (you need stronger help here):

- Teams don’t trust reports today

- Data is spread across tools with no clear ownership

- You rely on manual exports to “make things work”

2) Integration reality: will this need to run inside core systems?

AI that sits outside operations becomes another dashboard nobody uses.

Ask:

- Does the output need to trigger action inside ERP/CRM/ITSM/workflow tools?

- Will it write back to systems or just “recommend”?

- Do we have APIs/events available, or are we dealing with legacy constraints?

Green flags:

- APIs exist, workflows are known, integration owners are involved

Risk signal:

- “We’ll integrate later” is the plan. (That’s how pilots die.)

3) Security + privacy: are you prepared for the questions you’ll definitely get?

If your use case touches customer data, regulated data, or business-critical decisions, security will ask the right questions, early.

Ask:

- What data can be sent to models, and what must stay internal?

- Who is allowed to view outputs (and are outputs sensitive too)?

- Do we need audit trails for prompts/inputs/outputs/decisions?

- Do we have a policy for vendor tools and model providers?

Green flags:

- A clear stance on data boundaries + access controls

- Security is already involved

Risk signal:

- “We’ll figure it out after the PoC.” (That usually becomes a hard stop.)

4) Operating model: who owns it after go-live?

This is the silent killer. If there’s no owner, the AI system becomes a permanent escalation path.

Ask:

- Who monitors accuracy, drift, and failures?

- Who handles incidents and rollbacks?

- Who approves changes (data changes, model updates, prompt updates)?

- Who is accountable for outcomes in the business?

Green flags:

- Named owners + escalation path + release process

Risk signal:

- “The vendor will manage it” with no internal role clarity

5) Adoption reality: will people actually use it in the workflow?

Even great Gen AI fails if it doesn’t fit how work is done.

Ask:

- Does this replace a step, reduce time, or reduce risk in a real workflow?

- Will frontline teams trust it enough to act on it?

- Have we defined where humans review vs where automation is allowed?

Green flags:

- A clear “human-in-the-loop” decision point

- Training and workflow updates included

Risk signal:

- The plan is “we’ll just show them the tool”
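A “clear human-in-the-loop decision point” usually means an explicit rule for when an output is applied automatically and when it goes to a person. Here is a minimal Python sketch, assuming the system produces a confidence score between 0 and 1 and that some case types always need review; the threshold and the case-type names are hypothetical.

```python
from enum import Enum


class Route(Enum):
    AUTO_APPLY = "auto_apply"
    HUMAN_REVIEW = "human_review"


# Hypothetical case types that always require a person, regardless of score.
ALWAYS_REVIEW = {"payment_reversal", "medical_record_change"}


def route_output(case_type: str, confidence: float,
                 threshold: float = 0.9) -> Route:
    """Decide whether an AI output can be applied without human review."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if case_type in ALWAYS_REVIEW or confidence < threshold:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPLY
```

Keeping the rule in code (rather than buried in a prompt) makes it reviewable and auditable. The threshold itself should come from your evaluation data, not a guess.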

What this triage tells you (and how to use it)

- If you flagged data + integration, you need a partner strong in data engineering + systems integration (not just model building).

- If you flagged security + governance, you need a partner that designs for controls, auditability, and risk management from day one.

- If you flagged operating model + adoption, you need a partner that can deliver enablement, ownership, and production operations, not just a build team.

Now that you’ve identified your real gaps, the next section gives you the evaluation checklist that predicts success: the exact criteria to compare firms without being misled by demos.

The evaluation checklist that actually predicts success (production-first)

At this stage, don’t ask, “Who are the best Gen AI consulting companies?”

Ask: “Which firms can deliver our use case into production, inside our systems, under our controls, without creating a permanent dependency?”

SMEs evaluate partners using capability buckets that mirror real delivery. Below is the checklist. Use it exactly like a scorecard: each bucket includes what good looks like, what proof to ask for, and red flags that usually mean the project will stall.

1) Use-case discovery & value framing (do they start with outcomes?)

What good looks like

- They translate your idea into an operational workflow and define measurable KPIs.

- They can explain where AI fits, where humans review, and what changes in the process.

Proof to ask for

- A sample use-case brief: “problem – workflow – KPI – success criteria”

- A value scoring method (value vs feasibility vs risk)

Red flags

- They jump to tools/models before clarifying workflow and KPI.

- “We can do everything” but can’t explain what they’d do first.

2) Data readiness & engineering capability (can they work with messy reality?)

What good looks like

- They diagnose data gaps quickly and propose pragmatic fixes: quality checks, reconciliation, schema handling.

- They can explain how they’ll prevent “silent failures” when sources change.

Proof to ask for

- Example of a data readiness checklist or data quality monitoring approach

- A sample data pipeline/validation plan (even high level)

Red flags

- They assume clean data or request perfect datasets upfront.

- No mention of data ownership, lineage, or validation.
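One common way to prevent “silent failures” is to validate every incoming record against the fields and types the pipeline expects, and to quarantine anything that doesn’t match instead of passing it to the model. Here is a minimal standard-library Python sketch; the claims-feed schema is a hypothetical example, and many teams would use a dedicated validation library instead.

```python
from typing import Any

# Hypothetical expected schema for a claims feed: field name -> required type.
EXPECTED_SCHEMA: dict[str, type] = {
    "claim_id": str,
    "customer_id": str,
    "amount": float,
    "status": str,
}


def validate_record(record: dict[str, Any],
                    schema: dict[str, type] = EXPECTED_SCHEMA) -> list[str]:
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {actual}")
    # New fields often mean the upstream source changed shape.
    for field in record.keys() - schema.keys():
        problems.append(f"unexpected field: {field}")
    return problems


def split_batch(records: list[dict[str, Any]]):
    """Separate valid records from ones to quarantine for investigation."""
    valid, quarantined = [], []
    for rec in records:
        issues = validate_record(rec)
        if issues:
            quarantined.append((rec, issues))
        else:
            valid.append(rec)
    return valid, quarantined
```

A good partner will also ask who gets alerted when the quarantine count spikes; validation without an owner is just another silent failure.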

3) Architecture & integration (can it run inside your ecosystem?)

What good looks like

- They speak in integration patterns: APIs, events, queues, workflow triggers, identity/access boundaries.

- They know how to embed AI into ERP/CRM/ITSM processes without breaking them.

Proof to ask for

- An architecture diagram from a past delivery (sanitized is fine)

- Integration approach: where it reads/writes, how failures are handled

Red flags

- “We’ll integrate later” or “just call the model endpoint.”

- No mention of reliability patterns (retry, fallback, circuit-breaking, idempotency).
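To show what those reliability patterns look like in practice, here is a minimal Python sketch of a wrapper around a model call: retries with exponential backoff for transient errors, a fallback path when every attempt fails, and an idempotency key so a repeated request returns the earlier result instead of re-running the call (and any write-back it triggers). `call_model`, `fallback`, and the in-memory `_processed` store are hypothetical stand-ins; a real system would use its own client and a durable store, and would usually add a circuit breaker (not shown here).

```python
import hashlib
import time
from typing import Callable

# In-memory store of successful results, keyed by idempotency key.
# Hypothetical: production systems need a durable, shared store instead.
_processed: dict[str, str] = {}


def idempotency_key(case_id: str, payload: str) -> str:
    """Derive a stable key so a retried request maps to the same record."""
    return hashlib.sha256(f"{case_id}:{payload}".encode("utf-8")).hexdigest()


def call_with_resilience(case_id: str, payload: str,
                         call_model: Callable[[str], str],
                         fallback: Callable[[str], str],
                         max_attempts: int = 3,
                         base_delay: float = 0.5) -> str:
    """Call the model with retries and backoff; fall back if all attempts fail."""
    key = idempotency_key(case_id, payload)
    if key in _processed:  # already handled: reuse the earlier result
        return _processed[key]
    for attempt in range(max_attempts):
        try:
            result = call_model(payload)
        except (TimeoutError, ConnectionError):
            if attempt < max_attempts - 1:
                time.sleep(base_delay * 2 ** attempt)  # exponential backoff
            continue
        _processed[key] = result  # cache only successful results
        return result
    # Every attempt failed: degrade gracefully (e.g., route to a manual queue).
    return fallback(payload)
```

Fallback results are deliberately not cached, so a case routed to manual handling can be retried later. Ask candidate firms to explain where each of these decisions lives in their own designs.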

4) Model / GenAI approach (fit-for-purpose, not overkill)

What good looks like

- They choose the simplest approach that meets requirements (rules + AI, retrieval + LLM, classification, etc.).

- They can explain trade-offs: accuracy vs latency vs cost vs control.

Proof to ask for

- How they evaluate model quality (and what metrics they use)

- Example of prompt/version management or model selection rationale

Red flags

- Overpromising “human-level intelligence.”

- They can’t explain failure modes or when the model is likely to be wrong.

5) MLOps / LLMOps (production lifecycle discipline)

This is where most “great PoCs” die. Production means monitoring, rollback, and controlled change.

What good looks like

- Clear plan for deployment, monitoring, drift checks, retraining/refresh, and rollback.

- They treat the model as a living system with operational ownership.

Proof to ask for

- A monitoring plan: what is monitored, alert thresholds, incident response

- A release approach: how changes are tested and approved

Red flags

- “Once it’s built, it’s done.”

- Monitoring is described as “we’ll watch it manually.”

(This kind of production discipline aligns with the MLOps lifecycle patterns commonly emphasized in Google Cloud and AWS guidance.)
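A monitoring plan is easier to evaluate when its thresholds are written down as data rather than described in prose. Here is a minimal Python sketch that checks one monitoring window against alert thresholds; the metric names and limits are hypothetical and should come from your own baseline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "max": alert if above limit; "min": alert if below limit


# Hypothetical thresholds agreed during the monitoring-plan review.
THRESHOLDS = [
    Threshold("p95_latency_ms", 2000.0, "max"),
    Threshold("error_rate", 0.05, "max"),
    Threshold("acceptance_rate", 0.80, "min"),
]


def check_window(observed: dict[str, float],
                 thresholds: list[Threshold] = THRESHOLDS) -> list[str]:
    """Return alert messages for one monitoring window."""
    alerts = []
    for t in thresholds:
        value = observed.get(t.metric)
        if value is None:
            # A missing metric is itself a signal: the pipeline may be broken.
            alerts.append(f"{t.metric}: no data")
        elif t.direction == "max" and value > t.limit:
            alerts.append(f"{t.metric}: {value} above {t.limit}")
        elif t.direction == "min" and value < t.limit:
            alerts.append(f"{t.metric}: {value} below {t.limit}")
    return alerts
```

Each alert should map to a named owner and a runbook entry; otherwise this is still “we’ll watch it manually.”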

6) Security & privacy (data boundaries and access control are non-negotiable)

What good looks like

- They start with your data classification and define what can/can’t go to the model.

- They have a clear approach to identity, access control, logging, and retention.

Proof to ask for

- Security design outline: access control, encryption, logging, retention

- How they handle sensitive data in prompts/outputs

Red flags

- Hand-wavy answers like “we’re secure by default.”

- They can’t explain where data goes, how it’s stored, or who can see outputs.
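For “how they handle sensitive data in prompts/outputs,” one common control is to mask sensitive values before text leaves your boundary. Here is a minimal Python sketch using regular expressions for two illustrative patterns (email addresses and 13–16 digit card-like numbers). Regex redaction is a simplification that misses many real PII formats, so treat the patterns as hypothetical placeholders for your own data-classification rules.

```python
import re

# Illustrative patterns only; real classification needs much broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13-16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders; return the text and per-type counts."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

For example, `redact("Contact jane.doe@example.com, card 4111 1111 1111 1111.")` returns `"Contact [EMAIL], card [CARD]."` with one match of each type. The counts are worth logging too: they show security reviewers that the control actually runs.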

7) Governance & responsible Gen AI (risk control, auditability, and decision traceability)

What good looks like

- They define governance as a process: roles, approvals, documentation, audit trail.

- They can explain how decisions are traceable: what input led to what output and why.

Proof to ask for

- Sample governance workflow (approvals, documentation, change control)

- How they test for bias/drift and document model behavior

Red flags

- Governance is treated as “a policy deck.”

- No story for audit trail or decision traceability.

(Strong governance framing aligns with the risk-based approach of the NIST AI Risk Management Framework.)
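Decision traceability usually comes down to recording, for every output, enough to answer: what input led to what output, under which model and prompt version, and who approved it. Here is a minimal Python sketch that builds an append-only JSON-lines audit record. The field names, and the choice to store a hash of the input rather than the raw text, are assumptions to adapt to your own retention and privacy rules.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(case_id: str, model_id: str, prompt_version: str,
                 input_text: str, output_text: str,
                 reviewer: Optional[str] = None) -> dict:
    """Build one traceability record for a single AI decision."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "case_id": case_id,
        "model_id": model_id,
        "prompt_version": prompt_version,
        # Hash instead of raw text so the log itself holds no sensitive input.
        "input_sha256": hashlib.sha256(input_text.encode("utf-8")).hexdigest(),
        "output": output_text,  # outputs may be sensitive too; apply retention rules
        "reviewer": reviewer,   # None means no human approved this output
    }


def append_audit(path: str, record: dict) -> None:
    """Append a record as one JSON line (an append-only log)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
```

Recording the prompt version alongside the model ID is what lets you answer “what changed?” after an incident, which is exactly the story a governance red flag says is missing.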

8) Enablement & handover (will your team own it, or stay dependent?)

What good looks like

- They plan the handover from day one: documentation, runbooks, training, ownership model.

- They leave behind artifacts your team can operate confidently.

Proof to ask for

- Sample runbook / SOP (sanitized)

- Training plan and post-go-live support model

Red flags

- Knowledge stays in their heads.

- “We’ll manage it for you” without explaining what you’ll own internally.
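If you use the eight buckets as a scorecard, writing the weights down before any vendor demo keeps the comparison honest. Here is a minimal Python sketch of a weighted score with a hard floor on critical buckets; the 1–5 scale, the weights, and which buckets count as critical are assumptions, not a standard, and should reflect what your readiness triage flagged.

```python
# Hypothetical weights per bucket (they sum to 1.0); tune to your triage results.
WEIGHTS = {
    "discovery": 0.10,
    "data": 0.15,
    "integration": 0.15,
    "model_approach": 0.10,
    "llmops": 0.15,
    "security": 0.15,
    "governance": 0.10,
    "enablement": 0.10,
}
# Buckets where a weak score disqualifies a firm regardless of its total.
CRITICAL = {"security", "integration"}
FLOOR = 3  # minimum acceptable score (1-5 scale) on critical buckets


def score_firm(scores: dict[str, int]) -> tuple[float, bool]:
    """Return (weighted score on a 1-5 scale, passes critical floors)."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total = sum(WEIGHTS[b] * scores[b] for b in WEIGHTS)
    passes = all(scores[b] >= FLOOR for b in CRITICAL)
    return round(total, 2), passes
```

The floor matters more than the total: a firm that scores 4 everywhere but 2 on security still averages 3.7, yet should not make your shortlist.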

10 RFP questions you should ask every Gen AI consulting firm

Use these questions as your “truth filter.” They’re designed to expose whether a firm can deliver production-grade Gen AI (inside real systems, under real controls) or whether you’re about to buy another polished pilot.

A) Delivery proof (can they show real outcomes, not just capability?)

- Show us a similar use case that’s in production. What was the workflow, and what KPI moved?

- What a strong answer sounds like: specific workflow + measurable metric + timeline + what they did to achieve it.